DATABASE

How do we study

The materials and data provided on this page were developed and collected for research conducted in our lab. They are made available exclusively for non-commercial research purposes. Comprehensive explanations and further information can be found in the accompanying paper(s) listed under ‘citation’. For any inquiries, please feel free to reach out to DongWon Oh directly via email (doh [at] nus [dot] edu [dot] sg).

Generic tools for stimulus generation and analysis (e.g., FaceGen Bin Tools) can be found on DongWon Oh’s GitHub page (link below).

Faces Paired with Clothes of Various Apparent Economic Statuses

This image set contains 600+ images of male persons and visual mask images, as well as 74 clothing-only images. The images vary in upper-body clothing, which a group of human raters rated as appearing either “expensive” or “cheap” (that is, human ratings verified that status impressions were manipulated via clothing as intended). The sample images below do not show faces for privacy reasons; the actual stimuli include the faces. The stimuli come with ratings of the person images on competence impressions.

citation:

Oh, D., Shafir, E., & Todorov, A. (2020). Economic status cues from clothes affect perceived competence from faces. Nature Human Behaviour, 4, 287–293. doi:10.1038/s41562-019-0782-4

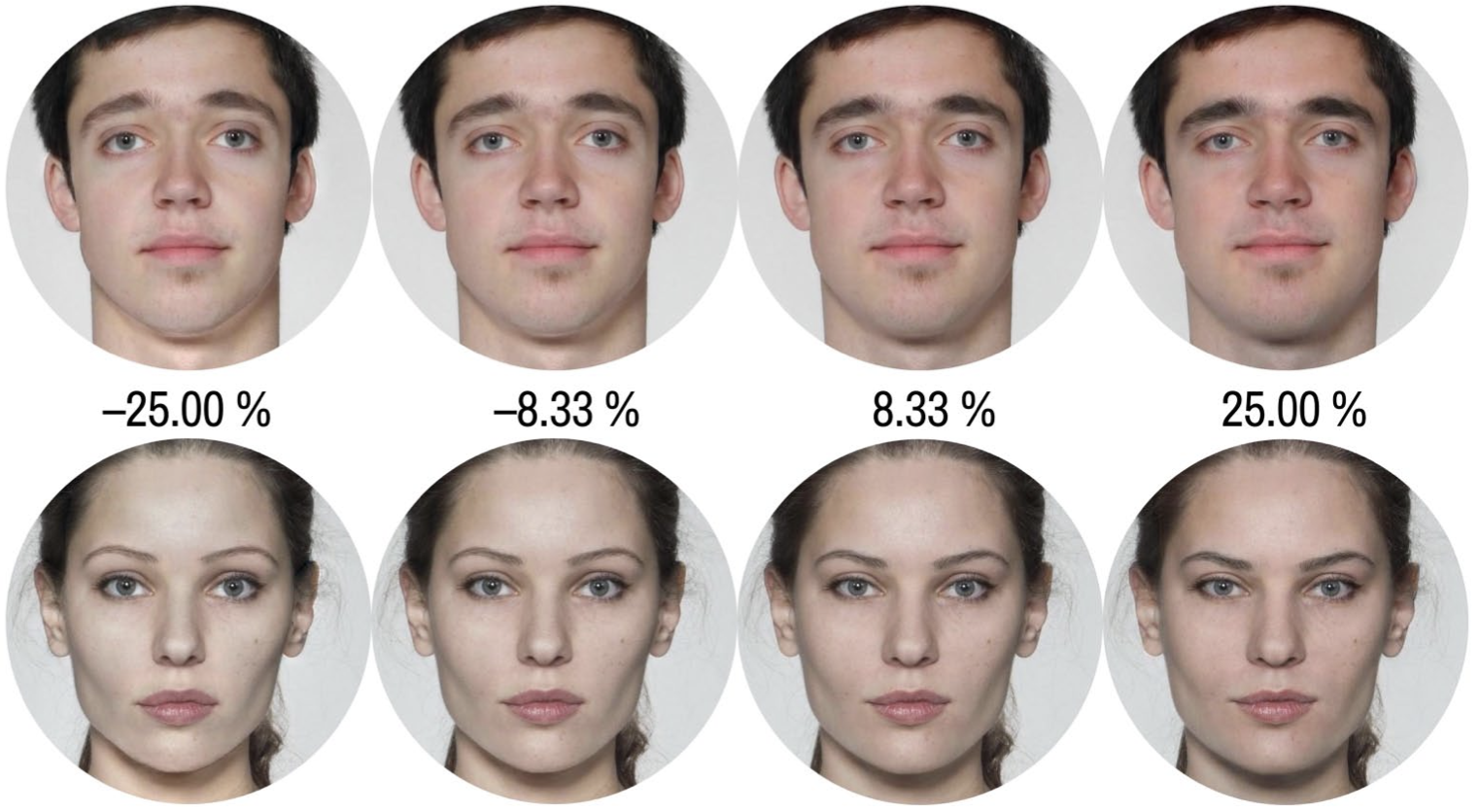

Faces Varying on Trustworthiness and Dominance Impressions

This image set contains 1,400 synthetic images and 1,400 real-life images of individuals (25 females and 25 males in each type) that were made to appear “trustworthy” (or “untrustworthy”) or “dominant” (or “submissive”) to various degrees. Human ratings have verified that the impressions were manipulated as intended. Both male and female face images are available for both synthetic and real-life images. Synthetic images consist only of White faces. Real-life images consist of racially diverse individuals (self-identified White, Black, Asian, Latinx). The faces were manipulated using data-driven, statistical models of gender-specific trustworthiness impressions and gender-specific dominance impressions. The stimuli come with ratings of the person images.

citation:

Oh, D., Dotsch, R., Porter, J., & Todorov, A. (2020). Gender biases in impressions from faces: Empirical studies and computational models. Journal of Experimental Psychology: General, 149(2), 323-342. doi:10.1037/xge0000638

Faces Varying on Competence Impressions without the Attractiveness Halo

This image set contains 160 real-life images (10 female, 10 male, all Caucasian) and 525 synthetic faces (25 faces, some female-appearing after manipulation) that were made to appear competent (or incompetent) to various degrees. Human ratings have verified that the impressions were successfully manipulated. Importantly, the set consists of three subsets of images: faces of multiple identities were varied by one of three face models:

Model 1. Original Competence Impression Model

Model 2. Difference (Subtraction) Competence Model (Competence – Attractiveness)

Model 3. Orthogonal Competence Model (Competence ⟂ Attractiveness)

Importantly, Models 2 and 3 vary person images on competence impressions without relying on changes in facial attractiveness. The stimuli come with ratings of the individuals on competence, attractiveness, confidence, and masculinity.

This image set is useful when one tests the effect of face-based competence impressions on behavior while controlling for attractiveness’s impact on competence impressions.
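As a rough illustration of how Models 2 and 3 differ, the sketch below treats each impression model as a direction vector in a face parameter space. This is an assumption made for illustration only: the function names, the toy numbers, and the absence of any normalization step are hypothetical, and the accompanying paper describes the procedure actually used.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def difference_model(competence, attractiveness):
    """Model 2 sketch: subtract the attractiveness vector from the competence vector."""
    return [c - a for c, a in zip(competence, attractiveness)]


def orthogonal_model(competence, attractiveness):
    """Model 3 sketch: remove the projection of competence onto attractiveness,
    leaving the part of competence that is orthogonal to attractiveness."""
    k = dot(competence, attractiveness) / dot(attractiveness, attractiveness)
    return [c - k * a for c, a in zip(competence, attractiveness)]


# Toy 3-dimensional direction vectors (made-up numbers, not real model weights).
competence = [2.0, 1.0, 0.0]
attractiveness = [1.0, 0.0, 0.0]

model2 = difference_model(competence, attractiveness)
model3 = orthogonal_model(competence, attractiveness)

print("Model 2 (difference):", model2)
print("Model 3 (orthogonal):", model3)
print("Model 3 . attractiveness:", dot(model3, attractiveness))
```

In this toy case the orthogonal vector has a zero dot product with the attractiveness vector, whereas the difference vector generally does not, which is one way to see why the two models are provided as separate subsets.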

citation:

Oh, D., Buck, E. A., & Todorov, A. (2019). Revealing hidden gender biases in competence impressions of faces. Psychological Science, 30(1), 65-79. doi:10.1177/0956797618813092

Faces Varying on Trustworthiness Impressions without Attractiveness Halo

This image set contains 525 synthetic images of individuals (25 male, but some female-appearing after manipulation) that were made to appear trustworthy (or untrustworthy) to various degrees. Human ratings have verified that the impressions were successfully manipulated. Faces of multiple identities were varied by one of three face models:

Model 1. Original Trustworthiness Impression Model

Model 2. Subtraction Trustworthiness Model (Trustworthiness – Attractiveness)

Model 3. Orthogonal Trustworthiness Model (Trustworthiness ⟂ Attractiveness)

Importantly, Models 2 and 3 vary person images on trustworthiness impressions without relying on changes in facial attractiveness. The stimuli come with ratings of the individuals on trustworthiness, attractiveness, approachability, and emotional expression (angry–happy).

This image set is useful when one tests the effect of face-based trustworthiness impressions on behavior while controlling for attractiveness’s impact on trustworthiness impressions.

citation:

Oh, D., Wedel, N., Labbree, B., & Todorov, A. (in press). Data-driven models of face-based trustworthiness judgments unconfounded by attractiveness. Perception.

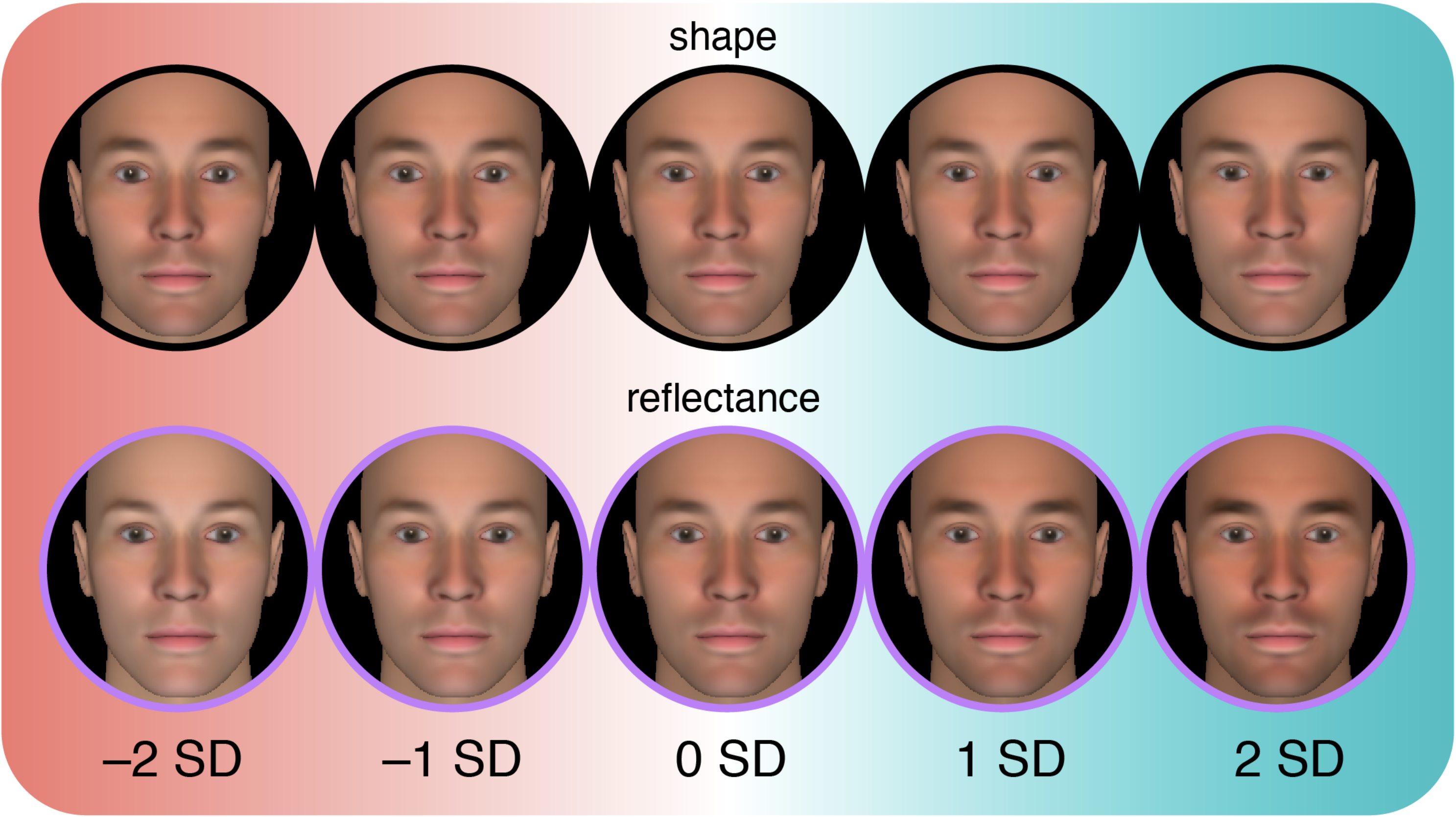

Faces Varying on Various Facial Attractiveness Cues

This image set consists of 76 synthetic male face images that exhibit variations in attractiveness cues. Specifically, the variations occur in three different categories of facial information: (1) face shape alone, (2) face reflectance alone, or (3) both face shape and reflectance.

citation:

Oh, D., Grant-Villegas, N., & Todorov, A. (2020). The eye wants what the heart wants: Females’ preference in male faces are related to partner personality preference. Journal of Experimental Psychology: Human Perception and Performance, 46(11), 1328–1343. doi:10.1037/xhp0000858

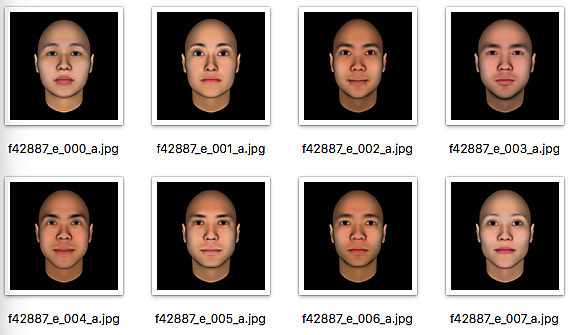

300 Synthetic East Asian Faces

This image set contains 300 synthetic East Asian face images. The 300 images are East Asian “twins” of the 300 Caucasian faces in Oosterhof & Todorov (2008); the 2008 paper describes how the original 300 Caucasian images were generated. In this image set, the FaceGen parameter coordinates of the 300 random Caucasian FaceGen faces are shifted so that they center around the average coordinates of the East Asian face in the FaceGen model. The center of the East Asian faces was calculated from actual East Asian faces, measured via 3D laser scan. This shift in the coordinates essentially makes the faces appear, on average, more East Asian.
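The coordinate shift described above can be sketched as a simple translation: every face is moved by the same offset so that the set centers on a target point. The sketch below is a minimal illustration under assumptions. It uses made-up 2-dimensional coordinates rather than real FaceGen parameters, the names `recenter` and `east_asian_center` are hypothetical, and it computes the offset from the sample mean, which the source does not specify.

```python
def mean_vector(points):
    """Component-wise mean of a list of equal-length coordinate vectors."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def recenter(points, target_center):
    """Translate all points by one shared offset so that their mean equals target_center.
    Differences between individual points are left unchanged."""
    current = mean_vector(points)
    offset = [t - c for t, c in zip(target_center, current)]
    return [[x + o for x, o in zip(p, offset)] for p in points]


# Toy coordinates standing in for face parameters (made-up numbers).
faces = [[1.0, -1.0], [-1.0, 1.0], [0.5, 0.5], [-0.5, -0.5]]
east_asian_center = [2.0, 3.0]  # hypothetical target center

shifted = recenter(faces, east_asian_center)
print("New center:", mean_vector(shifted))
```

Because every face receives the same offset, the variation among the faces is preserved and only the location of the set in the parameter space changes.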

This image set can be useful, for example, when one builds a data-driven face evaluation model of Asian faces (e.g., a face model of trustworthiness judgement). See Oosterhof & Todorov (2008, Proceedings of the National Academy of Sciences of the USA) and Todorov & Oosterhof (2011, IEEE Signal Processing Magazine) for details.

citation:

Oh, D., Wang, S., & Todorov, A. (2018). 300 random Asian faces. Figshare. Retrieved from https://figshare.com/articles/dataset/300_Random_Asian_Faces/7361270.